The self-driving car software stack is the intelligent system that enables autonomous vehicles to navigate and operate without human intervention, and CAR-REMOTE-REPAIR.EDU.VN can help you master this technology. By understanding the software stack, professionals can enhance their skills, opening doors to advanced diagnostics and repair techniques. This article explores self-driving car technology, vehicle automation, and autonomous vehicle systems.

Contents

- 1. Understanding the Self-Driving Car Software Stack

- 1.1. Key Components of the Software Stack

- 1.1.1. Sensing

- 1.1.2. Perception

- 1.1.3. Localization

- 1.1.4. Planning

- 1.1.5. Control

- 1.2. The Importance of Sensor Fusion

- 1.3. Mobileye’s Road Experience Management™ (REM™)

- 2. The Role of Artificial Intelligence in Self-Driving Cars

- 2.1. Computer Vision

- 2.2. Machine Learning

- 2.3. Deep Learning

- 3. How Self-Driving Cars Perceive Their Environment

- 3.1. Cameras

- 3.2. Radar

- 3.3. Lidar

- 4. Localization and Mapping in Autonomous Vehicles

- 4.1. GPS Limitations

- 4.2. High-Definition Maps

- 4.3. Sensor Fusion for Localization

- 5. Planning and Decision-Making in Autonomous Vehicles

- 5.1. Route Planning

- 5.2. Motion Planning

- 5.3. Collision Avoidance

- 5.4. Behavioral Planning

- 6. Control Systems in Self-Driving Cars

- 6.1. Brake Control

- 6.2. Steering Control

- 6.3. Acceleration Control

- 7. Challenges and Future Trends in Self-Driving Car Software

- 7.1. Safety Concerns

- 7.2. Reliability Issues

- 7.3. Regulatory Challenges

- 7.4. Future Trends

- 8. Training and Skill Development for Automotive Technicians

- 8.1. The Need for Specialized Training

- 8.2. CAR-REMOTE-REPAIR.EDU.VN Training Programs

- 8.3. Benefits of Remote Training

- 9. The Future of Automotive Repair with Remote Diagnostics

- 9.1. How Remote Diagnostics Works

- 9.2. Benefits of Remote Diagnostics

- 9.3. Remote Diagnostics for Self-Driving Cars

- 10. Case Studies: Successful Applications of Self-Driving Car Technology

- 10.1. Autonomous Taxis

- 10.2. Delivery Vehicles

- 10.3. Long-Haul Trucking

- 10.4. Agricultural Applications

- FAQ: Frequently Asked Questions About Self Driving Car Software Stack

- 1. What is a self driving car software stack?

- 2. What are the key components of a self driving car software stack?

- 3. How does sensor fusion enhance self driving car capabilities?

- 4. What role does artificial intelligence (AI) play in self driving cars?

- 5. How do self driving cars perceive their environment?

- 6. What are the limitations of GPS in autonomous vehicle localization?

- 7. What are the main challenges in developing self driving car software?

- 8. Why is specialized training needed for automotive technicians in self driving car technology?

- 9. How does remote diagnostics benefit the repair of self driving cars?

- 10. What are some future trends in self driving car software development?

1. Understanding the Self-Driving Car Software Stack

The self-driving car software stack is the comprehensive set of software components that enable a vehicle to perceive its environment, plan routes, and control its movements autonomously. According to a 2023 report by the National Highway Traffic Safety Administration (NHTSA), advanced driver-assistance systems (ADAS) are becoming increasingly prevalent, laying the groundwork for fully autonomous driving. This stack can be divided into several functional layers, each responsible for a specific aspect of autonomous driving.

1.1. Key Components of the Software Stack

The self-driving car software stack includes several key components, such as sensing, perception, localization, planning, and control. Each layer plays a crucial role in enabling the vehicle to operate autonomously.

1.1.1. Sensing

The sensing layer is responsible for gathering data about the vehicle’s surroundings using various sensors. According to research from the Massachusetts Institute of Technology (MIT), Department of Mechanical Engineering, in July 2023, advanced sensor technologies significantly enhance the reliability of autonomous driving systems. These sensors include cameras, radar, and lidar.

- Cameras: Capture visual information, detecting lane markers, traffic signs, and other vehicles. Cameras excel at detecting colors and shapes, making them well suited to identifying and classifying objects in the driving environment.

- Radar: Works reliably in low-visibility conditions, such as rain, snow, and fog. Radar is effective at detecting the presence and distance of objects, even when visibility is poor.

- Lidar: Uses laser light pulses to create a 3D map of the environment. Lidar provides highly accurate distance measurements and detailed environmental mapping.

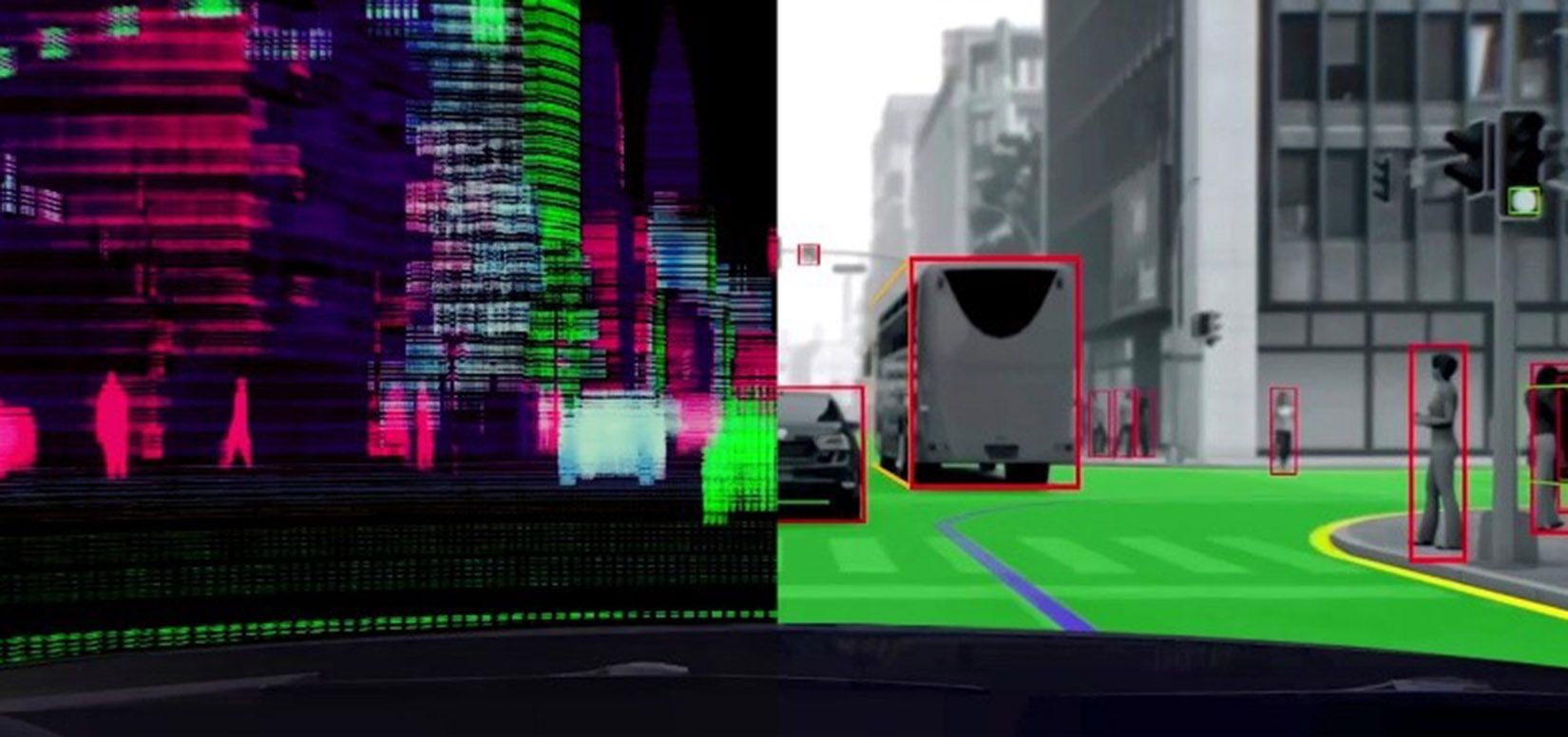

Self-driving cars use multiple sensors, including cameras, radar, and lidar, to perceive their surroundings and navigate autonomously.


1.1.2. Perception

The perception layer processes the sensor data to understand the environment. Computer vision algorithms are applied to detect lane markings, street signs, traffic lights, and other objects. According to a study by Stanford University’s Artificial Intelligence Laboratory in June 2024, machine learning algorithms are critical for improving the accuracy and reliability of object detection in autonomous vehicles.

- Object Recognition: Identifies and categorizes objects, such as cars, pedestrians, and cyclists.

- Pattern Recognition: Detects patterns in sensor data to identify specific situations, such as open car doors or hand signals from traffic police.

- Clustering Algorithms: Groups similar data points together to identify distinct objects or regions.
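As a rough illustration of the clustering step, the sketch below groups 2D points (for example, lidar returns projected onto the ground plane) into objects by distance. The function name `euclidean_clusters`, the `max_gap` threshold, and the sample points are assumptions made for this example; production perception systems typically use more sophisticated methods.

```python
from collections import deque
from math import dist


def euclidean_clusters(points, max_gap=1.0):
    """Group point indices so that each point in a cluster lies within
    max_gap of at least one other point in the same cluster."""
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        queue = deque([seed])
        members = [seed]
        while queue:
            current = queue.popleft()
            # Collect every unvisited point close enough to the current one.
            near = [i for i in unvisited
                    if dist(points[current], points[i]) <= max_gap]
            for i in near:
                unvisited.remove(i)
                queue.append(i)
                members.append(i)
        clusters.append(sorted(members))
    return sorted(clusters)


# Hypothetical points: three close together, two far away.
points = [(0.0, 0.0), (0.5, 0.2), (0.9, 0.1), (10.0, 10.0), (10.3, 9.8)]
clusters = euclidean_clusters(points, max_gap=1.0)
```

Here the first three points end up in one cluster and the last two in another, which a later stage could treat as two separate objects.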

1.1.3. Localization

Localization is the process of determining the vehicle’s precise location relative to its surroundings. GPS is commonly used for initial localization, but it has limitations in terms of accuracy. According to a report by the University of Michigan’s Transportation Research Institute in August 2023, high-definition maps and sensor fusion techniques are essential for achieving centimeter-level accuracy in localization.

- GPS: Provides initial location information but is often supplemented by other sensors for greater accuracy.

- Inertial Sensors: Measure the vehicle’s acceleration and rotation rate, which can be integrated over time to track its motion between GPS updates.

- High-Definition Maps: Contain detailed information about the road environment, allowing the vehicle to accurately determine its position.
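One simple way to see how motion sensors can carry the position estimate between GPS fixes is dead reckoning. The sketch below is a minimal illustration that assumes the vehicle's speed and yaw rate are already available; the function name `dead_reckon` and all values are hypothetical.

```python
from math import cos, sin


def dead_reckon(pose, speed, yaw_rate, dt):
    """Advance an (x, y, heading) pose by one time step.

    speed is in m/s, yaw_rate in rad/s, heading in radians.
    """
    x, y, heading = pose
    x += speed * cos(heading) * dt
    y += speed * sin(heading) * dt
    heading += yaw_rate * dt
    return (x, y, heading)


# Drive straight ahead at 10 m/s for one second in 0.1 s steps.
pose = (0.0, 0.0, 0.0)
for _ in range(10):
    pose = dead_reckon(pose, speed=10.0, yaw_rate=0.0, dt=0.1)
```

Because small measurement errors accumulate with every step, an estimate like this drifts over time, which is why it is combined with GPS and map information rather than used alone.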

1.1.4. Planning

The planning layer makes decisions about the vehicle’s route and actions based on the perceived environment and the vehicle’s location. This involves route planning, motion planning, collision avoidance, and behavioral planning. According to research from Carnegie Mellon University’s Robotics Institute in May 2024, advanced planning algorithms can significantly improve the safety and efficiency of autonomous driving.

- Route Planning: Determines the optimal route to reach the destination, taking into account traffic conditions and road closures.

- Motion Planning: Makes decisions about lane changes, merges, and other maneuvers.

- Collision Avoidance: Ensures the vehicle avoids moving and static objects on the roadway.

- Behavioral Planning: Takes into account the likely behavior of other road users.

1.1.5. Control

The control layer executes the planned actions by controlling the vehicle’s brakes, steering wheel, accelerator, and other components. According to a study by the University of California, Berkeley’s Partners for Advanced Transportation Technology (PATH) program in April 2023, precise control systems are crucial for ensuring smooth and safe autonomous driving.

- Brake Control: Regulates the braking system to slow down or stop the vehicle.

- Steering Control: Manages the steering wheel to maintain the vehicle’s course.

- Acceleration Control: Adjusts the accelerator to control the vehicle’s speed.
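A common textbook approach to tracking a target speed is a PID controller. The sketch below is illustrative only: the `PID` class, the gains, and the toy vehicle model (where the controller output is applied directly as acceleration) are assumptions and do not represent any specific vehicle's control system.

```python
class PID:
    """Minimal proportional-integral-derivative controller."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, target, measured, dt):
        error = target - measured
        self.integral += error * dt
        # No derivative term on the first call, when there is no history.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(target_speed=20.0, steps=600, dt=0.1):
    """Toy model: the controller output is used directly as acceleration."""
    controller = PID(kp=0.8, ki=0.1, kd=0.0)
    speed = 0.0
    for _ in range(steps):
        accel = controller.update(target_speed, speed, dt)
        speed += accel * dt
    return speed


final_speed = simulate()
```

In this toy simulation the speed settles close to the 20 m/s target. A real controller would also limit the commanded acceleration and account for brake and powertrain dynamics.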

1.2. The Importance of Sensor Fusion

Sensor fusion is the process of combining data from multiple sensors to create a comprehensive view of the vehicle’s surroundings. There are two main types of sensor fusion: early and late.

- Early Sensor Fusion: Combines raw data from sensors before applying object detection algorithms.

- Late Sensor Fusion: Applies object detection algorithms to each sensor’s data separately before combining the resulting 3D maps.

Mobileye’s self-driving system uses late sensor fusion and sensor redundancy to create independent models of the road environment. This approach enhances the reliability and robustness of the autonomous driving system.
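To make the idea of late fusion concrete, the sketch below merges object detections that two sensors produced independently. It is a generic illustration, not Mobileye's implementation: the `Detection` type, the `late_fuse` function, the gating distance, and the confidence-weighted averaging are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from math import dist


@dataclass
class Detection:
    x: float           # metres ahead of the vehicle
    y: float           # metres to the left of the vehicle
    confidence: float  # 0.0 to 1.0
    source: str


def late_fuse(camera, radar, gate=2.0):
    """Pair each camera detection with the nearest radar detection within
    `gate` metres and average their positions, weighted by confidence."""
    fused = []
    unmatched_radar = list(radar)
    for cam in camera:
        best = min(unmatched_radar,
                   key=lambda r: dist((cam.x, cam.y), (r.x, r.y)),
                   default=None)
        if best is not None and dist((cam.x, cam.y), (best.x, best.y)) <= gate:
            unmatched_radar.remove(best)
            total = cam.confidence + best.confidence
            fused.append(Detection(
                (cam.x * cam.confidence + best.x * best.confidence) / total,
                (cam.y * cam.confidence + best.y * best.confidence) / total,
                max(cam.confidence, best.confidence),
                "camera+radar",
            ))
        else:
            fused.append(cam)
    # Radar detections that no camera detection claimed are kept as-is.
    fused.extend(unmatched_radar)
    return fused


camera = [Detection(10.0, 0.0, 0.5, "camera"), Detection(30.0, 5.0, 0.9, "camera")]
radar = [Detection(11.0, 0.0, 0.5, "radar"), Detection(50.0, -3.0, 0.7, "radar")]
objects = late_fuse(camera, radar)
```

Keeping unmatched detections from either sensor reflects the redundancy idea: an object seen by only one sensor is still reported.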

1.3. Mobileye’s Road Experience Management™ (REM™)

Mobileye’s Road Experience Management™ (REM™) uses anonymous data crowdsourced from around the world to create dynamic, continuously updated maps. These maps capture how drivers use roads and the environment around them, giving the maps rich semantic detail.

2. The Role of Artificial Intelligence in Self-Driving Cars

Artificial intelligence (AI) is a critical component of the self-driving car software stack. AI algorithms are used for perception, localization, planning, and control. According to a 2024 report by McKinsey & Company, AI is expected to drive significant advancements in autonomous driving technology over the next decade.

2.1. Computer Vision

Computer vision is a subfield of AI that focuses on enabling computers to “see” and interpret images. In self-driving cars, computer vision algorithms are used to detect lane markings, street signs, traffic lights, and other objects.

2.2. Machine Learning

Machine learning is a type of AI that allows computers to learn from data without being explicitly programmed. Machine learning algorithms are used to improve the accuracy and reliability of object detection, prediction, and decision-making in autonomous vehicles.

2.3. Deep Learning

Deep learning is a subset of machine learning that uses artificial neural networks with multiple layers to analyze data. Deep learning algorithms have shown remarkable performance in image recognition, natural language processing, and other tasks.

3. How Self-Driving Cars Perceive Their Environment

Self-driving cars use a variety of sensors to perceive their environment. These sensors include cameras, radar, and lidar.

3.1. Cameras

Cameras capture visual information, allowing the car to “see” its surroundings. Cameras are used to detect lane markings, traffic signs, and other vehicles.

3.2. Radar

Radar uses radio waves to detect the presence and distance of objects. Radar works reliably in low-visibility conditions, such as rain, snow, and fog.

3.3. Lidar

Lidar uses laser light pulses to create a 3D map of the environment. Lidar provides highly accurate distance measurements and detailed environmental mapping.
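The distance measurement behind a pulsed lidar can be sketched with the time-of-flight relation: the pulse travels to the object and back, so the range is half the round-trip time multiplied by the speed of light. The function names and the sample beam angles below are assumptions for illustration.

```python
from math import cos, sin, radians

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def range_from_round_trip(seconds):
    """Distance to the reflecting object for a given round-trip time."""
    return SPEED_OF_LIGHT * seconds / 2.0


def to_cartesian(distance, azimuth_deg, elevation_deg):
    """Convert one range measurement and its beam angles to an (x, y, z) point."""
    az, el = radians(azimuth_deg), radians(elevation_deg)
    x = distance * cos(el) * cos(az)
    y = distance * cos(el) * sin(az)
    z = distance * sin(el)
    return (x, y, z)


# A pulse that returns after 200 nanoseconds hit something about 30 m away.
distance = range_from_round_trip(200e-9)
point = to_cartesian(distance, azimuth_deg=90.0, elevation_deg=0.0)
```

Repeating this conversion for many beam directions yields the point cloud that forms the 3D map.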

4. Localization and Mapping in Autonomous Vehicles

Localization and mapping are essential for enabling self-driving cars to navigate their environment. Localization is the process of determining the vehicle’s precise location, while mapping involves creating a detailed representation of the environment.

4.1. GPS Limitations

GPS is commonly used for initial localization, but it has limitations in terms of accuracy, especially in urban environments with tall buildings that can interfere with satellite signals.

4.2. High-Definition Maps

High-definition maps contain exact information about the location of static features of the road, such as curbs and pedestrian crossings. This allows the vehicle to more accurately determine its position relative to the road.

4.3. Sensor Fusion for Localization

Sensor fusion combines data from various sensors, such as inertial sensors, cameras, lidar, and radar, to provide more precise location information.
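One basic way to combine position estimates of differing quality is inverse-variance weighting, where more certain measurements count for more. The sketch below is a simplified one-dimensional illustration; the function name `fuse_estimates` and the sample values are assumptions, and real localization systems usually rely on filters that also model vehicle motion.

```python
def fuse_estimates(estimates):
    """Fuse (value, variance) pairs into a single (value, variance).

    Each estimate is weighted by the inverse of its variance.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical along-road positions in metres: a noisy GPS fix and a
# tighter estimate from matching lidar data against a map.
gps = (10.0, 4.0)
lidar_map_match = (12.0, 1.0)
position, variance = fuse_estimates([gps, lidar_map_match])
```

The fused position lands closer to the more certain estimate, and the fused variance is smaller than either input variance.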

5. Planning and Decision-Making in Autonomous Vehicles

The planning layer of the self-driving car software stack is responsible for making decisions about the vehicle’s route and actions. This involves route planning, motion planning, collision avoidance, and behavioral planning.

5.1. Route Planning

Route planning determines the optimal route to reach the destination, taking into account traffic conditions and road closures.
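Route planning of this kind is often illustrated with a shortest-path search over a road graph. The sketch below uses Dijkstra's algorithm as one example technique; the road network, travel times, and the `closed` parameter for road closures are hypothetical.

```python
import heapq


def shortest_route(graph, start, goal, closed=frozenset()):
    """Return (total_minutes, path) for the fastest route, skipping closed roads."""
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, minutes in graph.get(node, {}).items():
            if (node, neighbor) in closed or neighbor in visited:
                continue
            heapq.heappush(queue, (cost + minutes, neighbor, path + [neighbor]))
    return float("inf"), []


# Travel times in minutes between hypothetical intersections.
roads = {
    "A": {"B": 5.0, "C": 2.0},
    "B": {"D": 3.0},
    "C": {"B": 1.0, "D": 7.0},
    "D": {},
}
normal = shortest_route(roads, "A", "D")
detour = shortest_route(roads, "A", "D", closed={("C", "B")})
```

Traffic conditions can be modeled in the same structure by updating the travel times on affected roads before searching.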

5.2. Motion Planning

Motion planning makes decisions about lane changes, merges, and other maneuvers.

5.3. Collision Avoidance

Collision avoidance ensures the vehicle avoids moving and static objects on the roadway.
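A simple quantity often used when reasoning about collision risk is time to collision: the gap to the object ahead divided by the closing speed. The sketch below is a minimal illustration; the function names and the 2.5-second braking threshold are assumptions, not values from any production system.

```python
def time_to_collision(gap_m, ego_speed, lead_speed):
    """Seconds until the gap closes, or infinity if the gap is not shrinking."""
    closing_speed = ego_speed - lead_speed
    if closing_speed <= 0:
        return float("inf")
    return gap_m / closing_speed


def should_brake(gap_m, ego_speed, lead_speed, threshold_s=2.5):
    """Flag a braking request when time to collision drops below the threshold."""
    return time_to_collision(gap_m, ego_speed, lead_speed) < threshold_s


# Ego vehicle at 20 m/s, lead vehicle at 10 m/s, 30 m apart.
ttc = time_to_collision(30.0, 20.0, 10.0)
```

For a stationary obstacle, `lead_speed` is simply zero, so the same check covers both moving and static objects.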

5.4. Behavioral Planning

Behavioral planning takes into account the likely behavior of other road users.

6. Control Systems in Self-Driving Cars

The control layer executes the planned actions by controlling the vehicle’s brakes, steering wheel, accelerator, and other components.

6.1. Brake Control

Brake control regulates the braking system to slow down or stop the vehicle.

6.2. Steering Control

Steering control manages the steering wheel to maintain the vehicle’s course.
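One well-known way to compute a steering angle that keeps the vehicle on its planned path is the pure pursuit method, which steers toward a target point a short distance ahead. The sketch below is illustrative and assumes a simple bicycle model with the target point given in the vehicle's own frame, measured from the rear axle (x forward, y to the left); the function name and values are hypothetical.

```python
from math import atan, hypot


def pure_pursuit_steering(target_x, target_y, wheelbase):
    """Steering angle in radians toward a target point in the vehicle frame.

    Positive angles steer left, negative angles steer right.
    """
    lookahead = hypot(target_x, target_y)
    if lookahead == 0.0:
        return 0.0
    # Curvature of the circular arc that passes through the target point.
    curvature = 2.0 * target_y / lookahead ** 2
    return atan(wheelbase * curvature)


straight = pure_pursuit_steering(5.0, 0.0, wheelbase=2.5)
left_turn = pure_pursuit_steering(4.0, 3.0, wheelbase=2.5)
```

A target directly ahead gives a zero steering angle, while a target offset to the left gives a positive angle.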

6.3. Acceleration Control

Acceleration control adjusts the accelerator to control the vehicle’s speed.

7. Challenges and Future Trends in Self-Driving Car Software

The development of self-driving car software faces several challenges, including safety, reliability, and regulatory issues. However, ongoing research and development efforts are addressing these challenges and paving the way for future advancements.

7.1. Safety Concerns

Ensuring the safety of self-driving cars is a top priority. This requires rigorous testing and validation of the software and hardware components.

7.2. Reliability Issues

Self-driving cars must be reliable in a wide range of driving conditions. This requires robust sensor systems, accurate localization, and effective planning algorithms.

7.3. Regulatory Challenges

The regulatory landscape for self-driving cars is still evolving. Clear and consistent regulations are needed to ensure the safe and responsible deployment of autonomous vehicles.

7.4. Future Trends

Future trends in self-driving car software include:

- Advancements in AI: AI algorithms will continue to improve the accuracy and reliability of perception, localization, planning, and control.

- Improved Sensor Technology: New sensor technologies, such as solid-state lidar and advanced radar systems, will provide more detailed and accurate information about the environment.

- Enhanced Connectivity: Vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I) communication will enable self-driving cars to share information and coordinate their movements.

8. Training and Skill Development for Automotive Technicians

As self-driving technology becomes more prevalent, automotive technicians need to develop new skills to diagnose and repair these advanced systems. CAR-REMOTE-REPAIR.EDU.VN offers comprehensive training programs to help technicians stay ahead of the curve.

8.1. The Need for Specialized Training

Traditional automotive training programs do not adequately prepare technicians for the complexities of self-driving car software. Specialized training is needed to understand the software stack, diagnose issues, and perform repairs.

8.2. CAR-REMOTE-REPAIR.EDU.VN Training Programs

CAR-REMOTE-REPAIR.EDU.VN offers a range of training programs designed to equip automotive technicians with the skills they need to work on self-driving cars. These programs cover topics such as:

- Sensor Technology: Understanding the principles and operation of cameras, radar, and lidar.

- Computer Vision: Learning how computer vision algorithms are used to detect and classify objects.

- Localization and Mapping: Understanding the techniques used to determine the vehicle’s precise location.

- Planning and Control: Learning how the vehicle makes decisions and controls its movements.

- Diagnostics and Repair: Developing the skills to diagnose and repair issues with the self-driving car software stack.

8.3. Benefits of Remote Training

Remote training offers several benefits, including:

- Flexibility: Technicians can complete training at their own pace and on their own schedule.

- Accessibility: Remote training is accessible to technicians anywhere in the world.

- Cost-Effectiveness: Remote training can be more affordable than traditional classroom-based training.

Address: 1700 W Irving Park Rd, Chicago, IL 60613, United States.

Whatsapp: +1 (641) 206-8880.

Website: CAR-REMOTE-REPAIR.EDU.VN.

9. The Future of Automotive Repair with Remote Diagnostics

Remote diagnostics is transforming the automotive repair industry, allowing technicians to diagnose and repair vehicles from a remote location. This technology is particularly valuable for self-driving cars, which can be complex and difficult to diagnose.

9.1. How Remote Diagnostics Works

Remote diagnostics uses telematics data and remote access tools to diagnose issues with the vehicle’s software and hardware. Technicians can remotely access the vehicle’s computer systems, read diagnostic codes, and perform tests.
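As a small example of what reading diagnostic codes involves, the sketch below decodes a trouble code from two raw bytes, assuming the common OBD-II layout in which the top two bits select the system letter (P, C, B, or U) and the remaining bits hold the digits. The function name `decode_dtc` is an assumption, and how the bytes reach the technician's tool is outside the scope of this sketch.

```python
SYSTEM_LETTERS = "PCBU"  # Powertrain, Chassis, Body, Network


def decode_dtc(high_byte, low_byte):
    """Turn two raw bytes into a five-character trouble code such as 'P0301'."""
    letter = SYSTEM_LETTERS[(high_byte >> 6) & 0x03]
    first_digit = (high_byte >> 4) & 0x03
    second_digit = high_byte & 0x0F
    return f"{letter}{first_digit}{second_digit:X}{low_byte:02X}"


codes = [decode_dtc(0x03, 0x01), decode_dtc(0xC1, 0x00)]
```

The two sample byte pairs decode to a powertrain code and a network communication code respectively.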

9.2. Benefits of Remote Diagnostics

Remote diagnostics offers several benefits, including:

- Faster Diagnosis: Technicians can quickly diagnose issues without having to physically inspect the vehicle.

- Reduced Downtime: Remote diagnostics can help reduce the amount of time the vehicle is out of service.

- Cost Savings: Remote diagnostics can save on labor costs and reduce the need for expensive equipment.

9.3. Remote Diagnostics for Self-Driving Cars

Remote diagnostics is particularly valuable for self-driving cars, which can have complex software and hardware systems. Technicians can use remote diagnostics to identify and resolve issues with the self-driving car software stack, ensuring the vehicle operates safely and reliably.

10. Case Studies: Successful Applications of Self-Driving Car Technology

Self-driving car technology is being used in a variety of applications, including:

10.1. Autonomous Taxis

Autonomous taxis are being tested in several cities around the world. These taxis use self-driving car technology to transport passengers without a human driver.

10.2. Delivery Vehicles

Self-driving delivery vehicles are being used to transport goods and packages. These vehicles can operate 24/7, reducing delivery times and costs.

10.3. Long-Haul Trucking

Self-driving trucks are being tested for long-haul trucking applications. These trucks can improve fuel efficiency and reduce driver fatigue.

10.4. Agricultural Applications

Self-driving tractors and other agricultural vehicles are being used to automate farming tasks. These vehicles can improve efficiency and reduce labor costs.

FAQ: Frequently Asked Questions About Self Driving Car Software Stack

1. What is a self driving car software stack?

A self-driving car software stack is the set of software components that enables a vehicle to perceive its environment, plan routes, and control its movements autonomously. It includes sensing, perception, localization, planning, and control layers.

2. What are the key components of a self driving car software stack?

The key components are sensing (cameras, radar, lidar), perception (object recognition, pattern recognition), localization (GPS, inertial sensors, high-definition maps), planning (route planning, motion planning, collision avoidance), and control (brake, steering, and acceleration control).

3. How does sensor fusion enhance self driving car capabilities?

Sensor fusion combines data from multiple sensors to create a comprehensive view of the vehicle’s surroundings, enhancing reliability and robustness in autonomous driving. It can be early (raw data fusion) or late (fusing 3D maps).

4. What role does artificial intelligence (AI) play in self driving cars?

AI is critical for perception, localization, planning, and control. Computer vision helps interpret images, while machine learning and deep learning improve accuracy and decision-making.

5. How do self driving cars perceive their environment?

Self-driving cars use cameras for visual information, radar for distance and presence detection in low visibility, and lidar to create 3D maps of the environment.

6. What are the limitations of GPS in autonomous vehicle localization?

GPS accuracy is limited, especially in urban environments with tall buildings that interfere with satellite signals. High-definition maps and sensor fusion techniques improve localization accuracy.

7. What are the main challenges in developing self driving car software?

Challenges include ensuring safety and reliability, addressing regulatory issues, and continuously improving sensor technology and AI algorithms.

8. Why is specialized training needed for automotive technicians in self driving car technology?

Specialized training is necessary to understand the complexities of self-driving car software, diagnose issues, and perform repairs effectively, because traditional automotive training programs do not adequately cover these systems.

9. How does remote diagnostics benefit the repair of self driving cars?

Remote diagnostics enables faster diagnosis, reduced downtime, and cost savings by allowing technicians to access and diagnose vehicle systems remotely, which is particularly valuable for complex self-driving cars.

10. What are some future trends in self driving car software development?

Future trends include advancements in AI, improved sensor technology, enhanced connectivity through V2V and V2I communication, and the continuous refinement of safety and reliability measures.

Self-driving car technology is rapidly evolving, offering numerous opportunities for innovation and advancement. By understanding the self-driving car software stack and investing in training and skill development, automotive technicians can position themselves for success in this exciting field. CAR-REMOTE-REPAIR.EDU.VN is your trusted partner in navigating the future of automotive repair.

Ready to elevate your automotive repair skills and dive into the world of self-driving cars? Visit CAR-REMOTE-REPAIR.EDU.VN today to explore our comprehensive training programs and remote support services. Gain the expertise you need to excel in the rapidly evolving automotive industry. Don’t wait – transform your career now with CAR-REMOTE-REPAIR.EDU.VN!